Diffusion Policy

Visuomotor Policy Learning via Action Diffusion

This paper introduces Diffusion Policy, a new way of generating robot behavior by representing a robot's visuomotor policy as a conditional denoising diffusion process. We benchmark Diffusion Policy across 12 different tasks from 4 different robot manipulation benchmarks and find that it consistently outperforms existing state-of-the-art robot learning methods with an average improvement of 46.9%. Diffusion Policy learns the gradient of the action-distribution score function and iteratively optimizes with respect to this gradient field during inference via a series of stochastic Langevin dynamics steps. We find that the diffusion formulation yields powerful advantages when used for robot policies, including gracefully handling multimodal action distributions, being suitable for high-dimensional action spaces, and exhibiting impressive training stability. To fully unlock the potential of diffusion models for visuomotor policy learning on physical robots, this paper presents a set of key technical contributions including the incorporation of receding horizon control, visual conditioning, and the time-series diffusion transformer. We hope this work will help motivate a new generation of policy learning techniques that are able to leverage the powerful generative modeling capabilities of diffusion models.

Highlights

Simulation Benchmarks

Diffusion Policy outperforms prior state-of-the-art on 12 tasks across 4 benchmarks with an average success-rate improvement of 46.9%. Check out our paper for further details!

Standarized simulation benchmarks are essential for this project's development.

Special shoutout to the authors of these projects for open-sourcing their simulation environments:

1 Robomimic

2 Implicit Behavior Cloning

3 Behavior Transformer

4 Relay Policy Learning

Code and Data

Paper

Robotics: Science and Systems (RSS) 2023: arXiv:2303.04137v4 [cs.RO] or here.

Diffusion Policy: Visuomotor Policy Learning via Action Diffusion

Cheng Chi, Siyuan Feng, Yilun Du, Zhenjia Xu, Eric Cousineau, Benjamin Burchfiel, Shuran Song

The International Journal of Robotics Research (IJRR) 2024: arXiv:2303.04137v5 [cs.RO] or here.

Diffusion Policy: Visuomotor Policy Learning via Action Diffusion

Cheng Chi, Zhenjia Xu, Siyuan Feng, Eric Cousineau, Yilun Du, Benjamin Burchfiel, Russ Tedrake, Shuran Song

Bibtex

@inproceedings{chi2023diffusionpolicy,

title={Diffusion Policy: Visuomotor Policy Learning via Action Diffusion},

author={Chi, Cheng and Feng, Siyuan and Du, Yilun and Xu, Zhenjia and Cousineau, Eric and Burchfiel, Benjamin and Song, Shuran},

booktitle={Proceedings of Robotics: Science and Systems (RSS)},

year={2023}

}

@article{chi2024diffusionpolicy,

author = {Cheng Chi and Zhenjia Xu and Siyuan Feng and Eric Cousineau and Yilun Du and Benjamin Burchfiel and Russ Tedrake and Shuran Song},

title ={Diffusion Policy: Visuomotor Policy Learning via Action Diffusion},

journal = {The International Journal of Robotics Research},

year = {2024},

}

Team

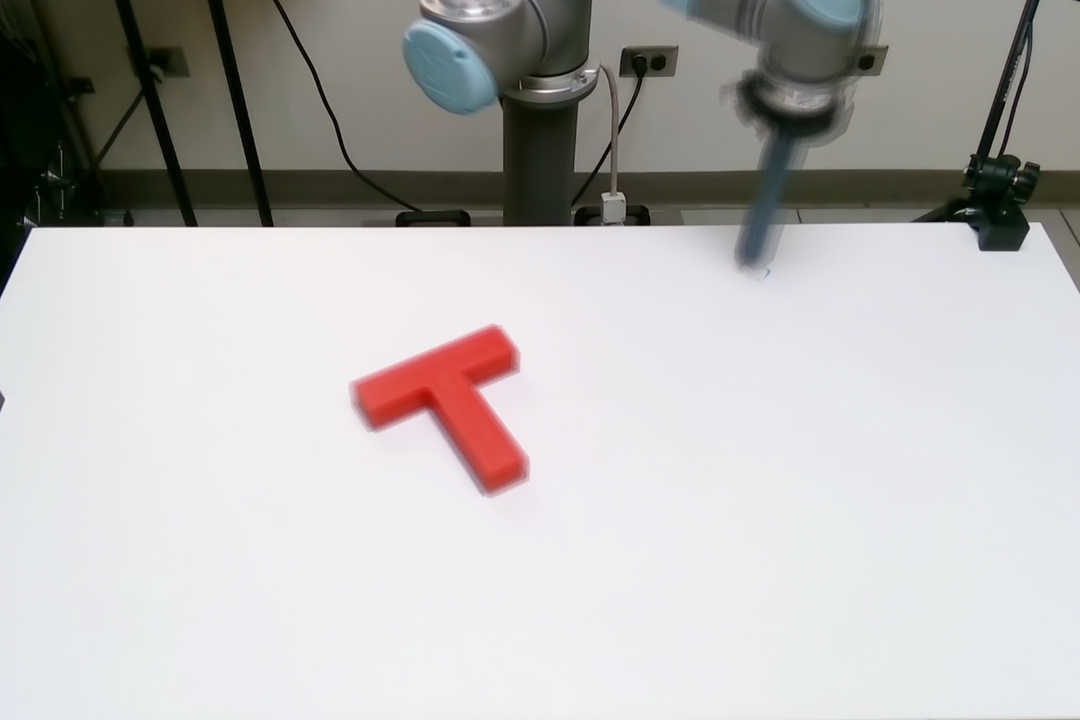

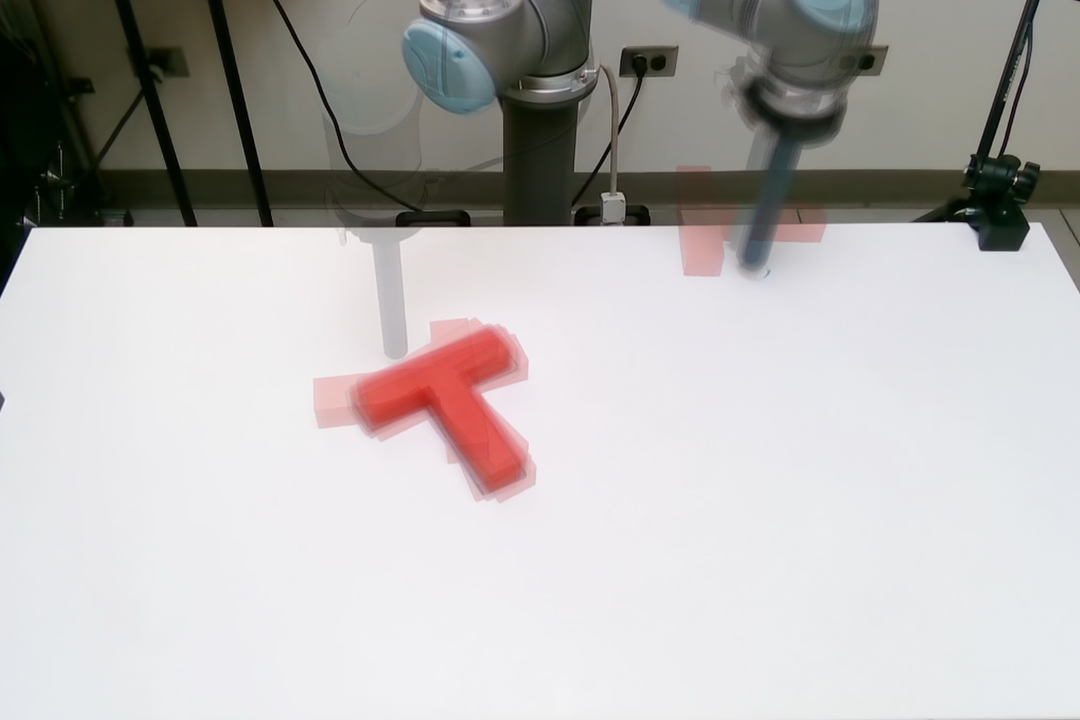

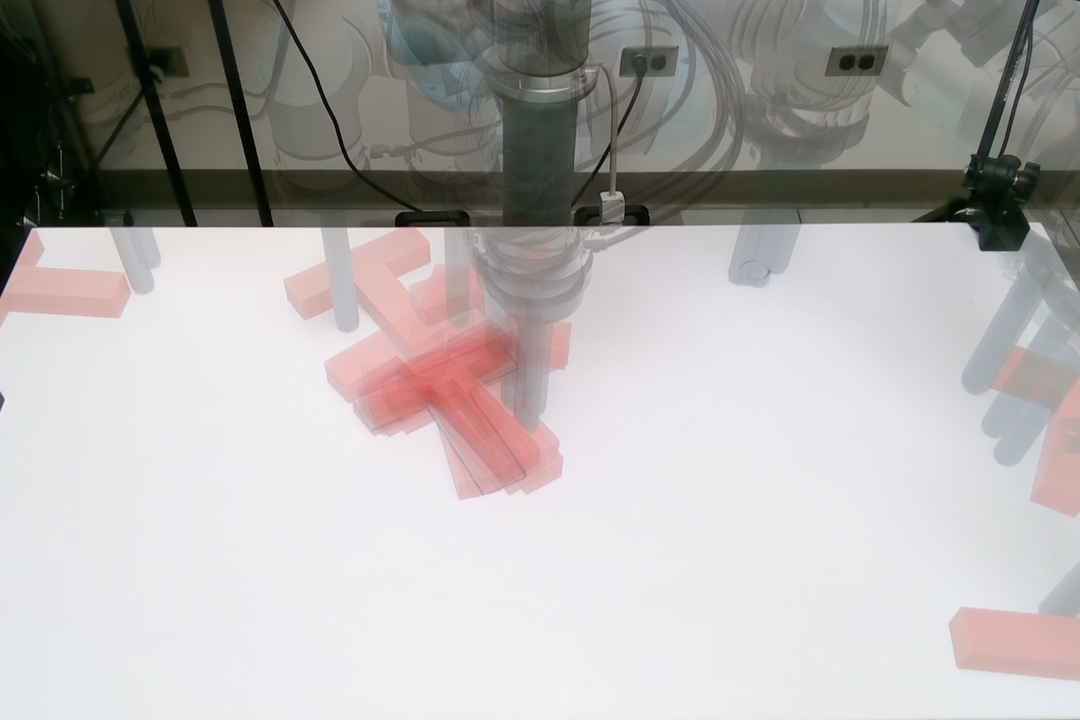

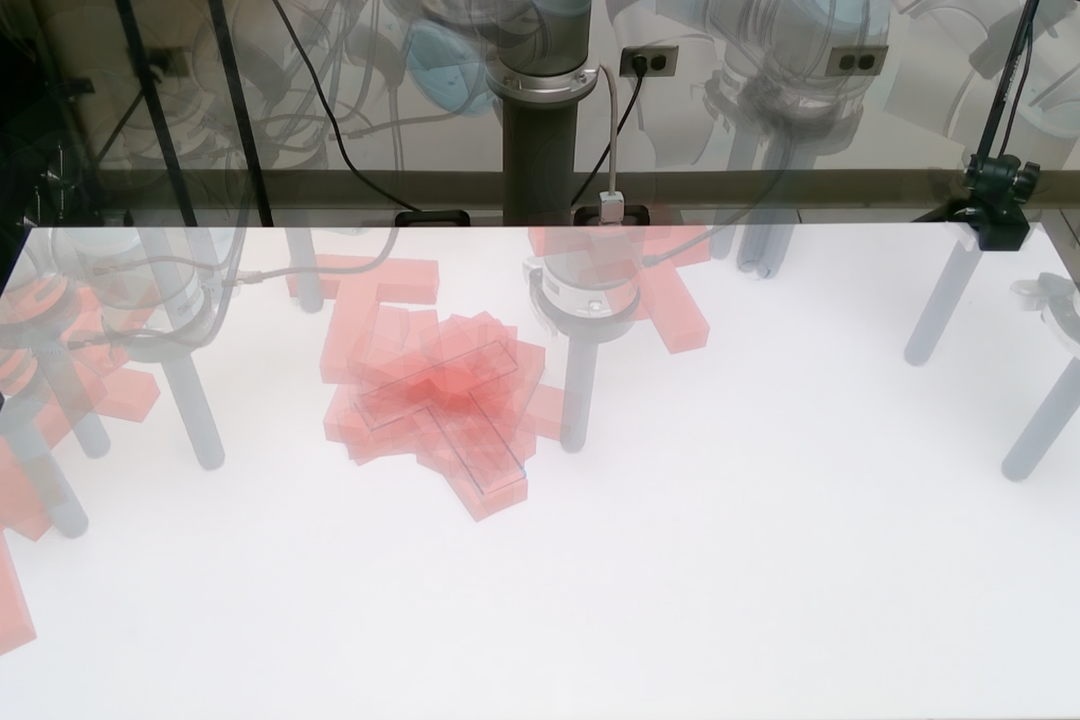

Real World Push-T Task

In this task, the robot needs to

① precisely push the T- shaped block into the target region, and

② move the end-effector to the end-zone which terminates the episode.

First row: Average of end-states for each method. Second row: Example rollout episode.

Success.

Success.

Success after stuck near the T block initially.

Success after stuck near the T block initially.

Common failure mode: stuck near the T block.

Common failure mode: stuck near the T block.

Common failure mode: entering the end-zone repmaturely.

Common failure mode: entering the end-zone repmaturely.

(0:05) Occlusion caused by waiving hand in front of the camera.

(0:11) Perturbation during pushing stage ①.

(0:39) Perturbation during finishing stage ②.

Real World Mug Flipping Task

In this task, the robot needs to

① Pickup a randomly placed mug and place it lip down (marked orange).

② Rotate the mug such that its handle is pointing left.

Realworld Sauce Pouring and Spreading Task

In the sauce pouring task, the robot needs to:

① Dip the ladle to scoop sauce from the bowl, ② approach the center of the pizza dough, ③ pour sauce, and ④ lift the ladle to finish the task.

In the sauce spreading task, the robot needs to:

① Aproach the center of the sauce with a grasped spoon ② spread the sauce to cover pizza in a spiral pattern, and ③ lift the spoon to finish the task.

Acknowledgements

This work was supported in part by NSF Awards 2037101, 2132519, 2037101, and Toyota Research Institute. We would like to thank Google for the UR5 robot hardware. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of the sponsors.

Contact

If you have any questions, please feel free to contact Cheng Chi